Introduction: Why SAT Scoring Feels Like a Puzzle

If you’ve ever stared at an SAT score report and felt a little dizzy, you’re not alone. Scores that look like neat numbers — 720, 1320, 1540 — carry a tangle of ideas behind them: percentiles, subscores, scaled scores, and terms like “equating” that sound like algebra class and don’t help much with college applications.

This blog is a friendly tour through the most commonly misunderstood parts of SAT scoring. I’ll explain what those numbers actually mean, demystify the things that cause stress but shouldn’t, and give practical steps you can take to improve your score reliably. Along the way I’ll mention how targeted resources like Sparkl’s personalized tutoring (1-on-1 guidance, tailored study plans, expert tutors, and AI-driven insights) can fit naturally into a smart preparation plan — when appropriate, not as a sales pitch.

Myth #1: There’s a “Curve” That Favors or Punishes You

People talk about “the curve” like the SAT is a popularity contest. The truth is less spooky: the SAT doesn’t use a secretive curve that boosts or crushes your raw points on whim. Instead, the College Board uses a process called equating to make scaled scores comparable across different test administrations.

Think of equating as a fairness adjustment. If Test A is slightly harder than Test B, a given raw score on Test A maps to a slightly higher scaled score, so the scaled score reflects ability rather than the luck of the test form. That means a 650 on a March test and a 650 on an October test represent essentially the same performance level, even though the questions were different.

Why this matters for you

- Don’t try to “game the curve” — it’s not something a student can control.

- Take full, official practice tests from the College Board; they’re equated to the real test and help set realistic expectations.

Myth #2: Your Raw Score Is the Score That Counts

Raw score is the number of questions you answered correctly. It’s the starting point, but colleges don’t see raw scores. They see scaled scores: each section (Evidence-Based Reading and Writing, and Math) is scaled from 200–800, and those two are combined for a 400–1600 composite.

Why scale? Because of equating (above). The raw-to-scaled conversion changes from one test form to the next, so the same raw score can map to different scaled scores depending on test difficulty. That’s why a practice test raw score gives you a sense of performance, but your scaled score is the official yardstick.

Illustration (Hypothetical)

Imagine you get 40 out of 52 Reading questions and 30 out of 44 Writing & Language questions. Those raw numbers combine into the Evidence-Based Reading and Writing raw total, then map to a scaled EBRW score. The details of mapping vary, so don’t assume a fixed conversion.
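To make the "no fixed conversion" point concrete, here is a minimal Python sketch. The two conversion tables, the form names, and every number in them are invented for illustration; they are not real College Board tables. The only idea being shown is that each form carries its own lookup table.

```python
# Hypothetical raw-to-scaled tables for two Math forms (all values invented).
# Form B is assumed to be slightly harder, so the same raw score maps a bit higher.
FORM_A = {40: 660, 41: 670, 42: 680, 43: 690, 44: 700}
FORM_B = {40: 670, 41: 680, 42: 690, 43: 700, 44: 710}


def scaled_score(raw: int, table: dict[int, int]) -> int:
    """Look up the scaled score for a raw score in one form's conversion table."""
    if raw not in table:
        raise ValueError(f"raw score {raw} is not covered by this sketch's table")
    return table[raw]


# Same raw score, two forms, two different scaled scores.
for raw in (40, 42, 44):
    print(raw, scaled_score(raw, FORM_A), scaled_score(raw, FORM_B))
```

In this sketch, 42 correct answers come out as 680 on one form and 690 on the other, which is exactly the situation equating is designed to produce.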

Myth #3: Guessing Hurts — Leave Blank Instead

This one dates back to older versions of the SAT. Today, there is no penalty for wrong answers, so guessing is never worse than leaving an answer blank, and it gets better still if you can narrow the options first.

- Eliminate one or two choices first — educated guesses improve expected value.

- Answer every question. An unanswered question has zero chance of being right.
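The arithmetic behind those two tips is short enough to sketch in Python. This assumes a four-choice question with one correct answer, one raw point for a right answer, and no deduction for a wrong one; the function name is mine, not anything official.

```python
from fractions import Fraction


def expected_points(remaining_choices: int) -> Fraction:
    """Expected raw points from a random guess among the remaining choices.

    Assumes one correct answer, one point for a right answer, and no
    deduction for a wrong one.
    """
    if remaining_choices < 1:
        raise ValueError("at least one choice must remain")
    return Fraction(1, remaining_choices)


BLANK = Fraction(0)  # a skipped question never earns a point

# 4 choices left = blind guess; 3 or 2 left = after eliminating options.
for remaining in (4, 3, 2):
    print(remaining, expected_points(remaining), expected_points(remaining) > BLANK)
```

A blind guess is worth a quarter of a point on average, and eliminating two choices doubles that to a half. Leaving the bubble empty is worth zero every time.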

How the SAT Is Structured — A Quick Map

Knowing what’s scored and how it’s reported reduces a lot of worry. Here’s the current structure in a nutshell:

- Two main section scores: Evidence-Based Reading and Writing (EBRW) and Math. Each is 200–800.

- Composite score: 400–1600 (sum of the two section scores).

- Test scores (10–40) for Reading, Writing & Language, and Math, used to derive the section scores.

- Cross-test scores (10–40) for Analysis in History/Social Studies and Analysis in Science.

- Subscores (1–15) for specific skill areas like Command of Evidence, Heart of Algebra, Problem Solving and Data Analysis, etc.

- The optional essay, when offered, is scored separately and does not affect the 400–1600 score.

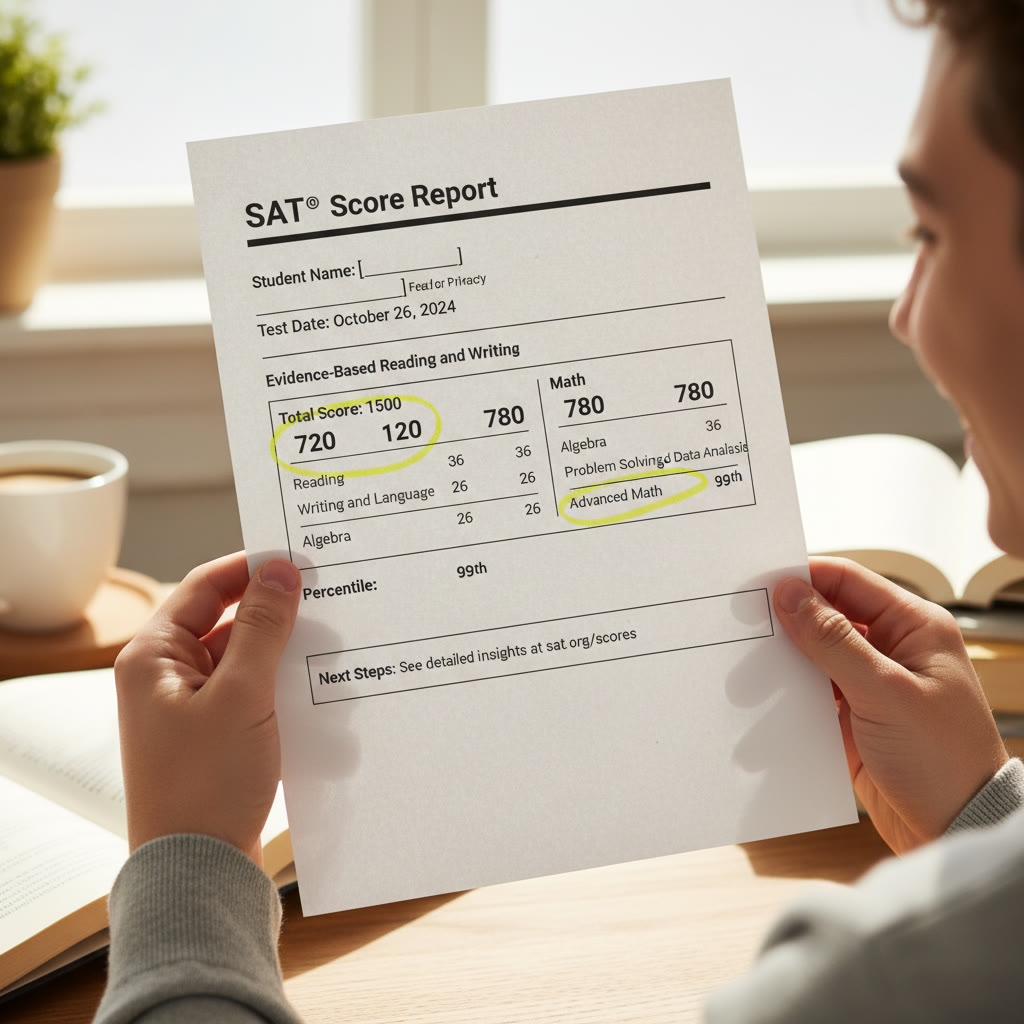

Subscores and Cross-Test Scores: Small Numbers, Big Insights

Those subscores that look like minor print on the page are actually gold. They tell you where you’re strong and where targeted practice will pay off. For example:

- Subscores (1–15) pinpoint skills: Words in Context, Expression of Ideas, Heart of Algebra, Passport to Advanced Math, and so on.

- Cross-test scores (10–40) measure how well you analyze data and evidence in history/social studies and science contexts.

Why this helps: if your overall Math score is stuck, a subscore might reveal that Heart of Algebra is the bottleneck. Work specifically on linear equations and that subscore — and your section score — will likely move.

Percentiles: What They Really Tell You

A score by itself is only half the story. Percentiles tell you how your score compares to other test takers. If you’re at the 85th percentile, you scored as well as or better than 85% of students in the reference group.

Important caveat: percentiles are updated periodically and reflect the performance of the group used in the College Board’s reference frame (recent cohorts of test takers). So a particular scaled score’s percentile can shift slightly over time.
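If you like seeing definitions as code, here is a rough Python sketch of a percentile rank. The reference scores are a made-up group of ten students, and the "at or below" counting rule is one common convention that I am assuming here; your official report uses the College Board's own reference group and method.

```python
def percentile_rank(score: int, reference_scores: list[int]) -> float:
    """Percent of the reference group scoring at or below the given score."""
    if not reference_scores:
        raise ValueError("reference group is empty")
    at_or_below = sum(1 for s in reference_scores if s <= score)
    return 100 * at_or_below / len(reference_scores)


# Hypothetical reference group of ten test takers.
reference = [900, 980, 1010, 1050, 1100, 1150, 1200, 1260, 1340, 1480]
print(percentile_rank(1200, reference))  # 70.0
```

Swap in a different reference group and the same 1200 lands on a different percentile, which is why percentiles drift a little from year to year.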

Approximate Percentile Guide (Illustrative)

| Scaled Score (1600 scale) | Approximate Percentile |
|---|---|
| 1600 | 99+ |
| 1500 | 98–99 |
| 1400 | 95–97 |
| 1300 | 85–90 |
| 1200 | 70–75 |
| 1100 | 55–60 |
| 1000 | 40–45 |
| 900 | 20–30 |
| 800 | 8–12 |

Keep in mind: these percentiles are illustrative. Use them to understand relative standing, but always check the percentile shown on your official score report for the exact context.

Reliability and the Standard Error of Measurement (SEM)

No test is a perfect snapshot. If you took the SAT today and again next month without changing your knowledge, your score might vary slightly. That natural fluctuation is described by the standard error of measurement (SEM).

For the SAT, the SEM on the total score is roughly 20–40 points. In practice, a score of 1300 is best read as a “true score” somewhere in a band around that number; with an SEM of 30, for example, that band runs from about 1270 to 1330. This band is not magic. It’s a statistical reminder that small differences in score aren’t always meaningful.

How to use SEM practically

- Don’t panic over a 10–20 point change between administrations — it could be noise.

- Look for sustained improvement across several tests and practice exams.
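Here is a small Python sketch of that habit of mind. The default SEM of 30 is my assumption, picked from the middle of the 20–40 range above, and the "within one SEM" check is a rough rule of thumb rather than a formal statistical test.

```python
def score_band(observed: int, sem: int = 30) -> tuple[int, int]:
    """Return a one-SEM band around an observed total score, clamped to 400-1600."""
    low = max(400, observed - sem)
    high = min(1600, observed + sem)
    return low, high


def within_noise(score_a: int, score_b: int, sem: int = 30) -> bool:
    """True when two total scores differ by no more than one SEM."""
    return abs(score_a - score_b) <= sem


print(score_band(1300))          # (1270, 1330)
print(within_noise(1300, 1320))  # True: a 20-point change could be noise
print(within_noise(1300, 1360))  # False: a 60-point change is harder to dismiss
```

One jump that clears the band is encouraging. Several in a row, across official practice tests, is the signal worth trusting.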

Superscoring and Score Choice — What’s the Difference?

Two policies students often mix up:

- Score Choice (a College Board feature): you can choose which test dates to send to colleges.

- Superscoring (college policy): some colleges combine your highest section scores across multiple test dates to make a new composite.

Check college policies on superscoring; many schools do superscore, but policies vary by institution. If a college superscores, it can make sense to focus on improving one section at a time rather than retaking the whole test for small gains across both sections.
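For anyone who wants to see the superscore arithmetic spelled out, here is a minimal Python sketch. The `Sitting` type, the test dates, and the scores are all hypothetical, and individual colleges may apply their own rules on top of this simple "best of each section" idea.

```python
from typing import NamedTuple


class Sitting(NamedTuple):
    """One test date with its two section scores (hypothetical data)."""
    date: str
    ebrw: int
    math: int


def superscore(sittings: list[Sitting]) -> int:
    """Sum the best EBRW and the best Math section scores across all sittings."""
    if not sittings:
        raise ValueError("need at least one sitting")
    best_ebrw = max(s.ebrw for s in sittings)
    best_math = max(s.math for s in sittings)
    return best_ebrw + best_math


def best_single_sitting(sittings: list[Sitting]) -> int:
    """Highest composite earned on any one test date."""
    if not sittings:
        raise ValueError("need at least one sitting")
    return max(s.ebrw + s.math for s in sittings)


sittings = [Sitting("March", 650, 700), Sitting("October", 690, 680)]
print(superscore(sittings))           # 1390
print(best_single_sitting(sittings))  # 1370
```

In this example the student never scored 1390 on a single day, but a superscoring college would see 690 EBRW from October next to 700 Math from March.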

Raw-to-Scaled: A Table Example (Illustrative Only)

To make the abstract concrete, here’s a hypothetical mapping from raw scores to section scaled scores. This is illustrative — actual mappings change by test and year.

| Raw Correct (EBRW out of 96) | Scaled EBRW (200–800) |
|---|---|
| 88–96 | 760–800 |
| 76–87 | 700–750 |
| 60–75 | 640–690 |
| 45–59 | 560–630 |
| 30–44 | 420–550 |

And for Math (out of 58):

| Raw Correct (Math out of 58) | Scaled Math (200–800) |
|---|---|
| 52–58 | 760–800 |
| 44–51 | 700–750 |
| 34–43 | 620–690 |
| 22–33 | 520–610 |
| 10–21 | 350–510 |

Again: these are examples to show the concept. The bottom line is simple: small raw improvements can translate into big scaled jumps near some thresholds, and sometimes the same raw improvement yields different scaled gains depending on where you start.
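You can check that last claim against the illustrative Math table with a few lines of Python. The bands are copied from the table above; treating each band as a straight line between its endpoints is my simplifying assumption, since real conversions move in uneven steps.

```python
# Bands from the illustrative Math table: (raw_low, raw_high, scaled_low, scaled_high)
MATH_BANDS = [
    (10, 21, 350, 510),
    (22, 33, 520, 610),
    (34, 43, 620, 690),
    (44, 51, 700, 750),
    (52, 58, 760, 800),
]


def scaled_points_per_raw_point(band: tuple[int, int, int, int]) -> float:
    """Average scaled gain per extra correct answer, assuming a linear band."""
    raw_low, raw_high, scaled_low, scaled_high = band
    return (scaled_high - scaled_low) / (raw_high - raw_low)


for band in MATH_BANDS:
    rate = scaled_points_per_raw_point(band)
    print(f"raw {band[0]}-{band[1]}: about {rate:.1f} scaled points per question")
```

In this made-up table, one more correct answer is worth about 14.5 scaled points in the lowest band and about 6.7 in the highest, so the payoff for each extra question depends on where you start.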

Common Misinterpretations That Hurt Strategy

- “I missed a question, so my whole section is ruined.” Not true. One mistake doesn’t collapse your score.

- “I got the same raw score on two tests but different scaled scores — something’s wrong.” No — that’s equating doing its job.

- “My practice test score was X, so I’ll definitely get Y on the real thing.” Practice tests are excellent predictors, but environmental factors, test-day nerves, and question variation mean practice is an estimate, not a guarantee.

Practical Steps: Where to Focus if You Want Higher Scores

Understanding scoring helps you target study. Here’s a prioritized, realistic plan:

1) Diagnostic + Targeted Plan

Take a full, timed official practice test to see your baseline. Use your subscores to identify 2–3 clear targets (e.g., Heart of Algebra and Command of Evidence).

2) Smart Practice, Not Just Volume

- Do focused drills on question types that hurt you. If passage-based vocabulary trips you up, drill Words in Context.

- For Math, prioritize conceptual clarity. Too many students memorize tricks rather than building understanding.

3) Realistic Timed Practice

Practice under test conditions regularly. Improve pacing and test stamina. Your raw score on timed practice is the clearest indicator of progress.

4) Review Mistakes Like Data

Every error is data. Categorize mistakes — careless, conceptual, misread, or timing — and fix the root cause. Subscores help you decide when to pivot study focus.
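If you keep your error log in a spreadsheet or a notebook, the tally can be as simple as this Python sketch. The question numbers and category labels are a hypothetical log from one practice test; use whatever categories match your own mistakes.

```python
from collections import Counter

# Hypothetical error log from one practice test: (question number, category).
error_log = [
    (4, "careless"),
    (11, "conceptual"),
    (17, "timing"),
    (23, "conceptual"),
    (31, "misread"),
    (38, "conceptual"),
]

# Count how often each category appears, most frequent first.
counts = Counter(category for _, category in error_log)
for category, n in counts.most_common():
    print(f"{category}: {n}")
```

Here conceptual errors account for three of the six misses, which suggests reviewing the underlying content before spending more time on pacing drills.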

5) Consider Personalized Help

When gains stall, personalized tutoring accelerates progress. Sparkl’s personalized tutoring can be especially helpful because it mixes expert tutors with tailored study plans and AI-driven insights to zero in on the exact question types and concepts that hold you back. A few targeted 1-on-1 sessions often clarify weak points faster than weeks of solo work.

Test-Day Tactics That Affect Scores More Than People Think

- Sleep and nutrition: cognitive performance hinges on both. Treat test day like a performance event.

- Know the test format cold. Familiarity cuts decision time and reduces stress.

- Answer the easy questions first to secure points, then move on to the harder problems.

When to Test and When to Retake

Timing matters. Plan tests so you have time after results to improve and retake if needed. If a retake helps, be strategic: focus on improving specific subscores rather than aiming for small across-the-board gains.

Some students benefit from a single broad improvement strategy; others get better returns from a surgical approach — e.g., two months focused solely on algebraic reasoning. Personalized tutors (like Sparkl’s experts) can help design that plan and track progress with practice test data.

Final Notes: What Scores Can and Can’t Tell You

Your SAT score is a useful, standardized snapshot of certain college-ready skills, not a full measure of potential. Admissions officers use scores as one factor among many, and scores tell you concrete things you can improve with practice.

Practical recap:

- Scaled scores are what matter; raw scores are the input.

- Equating ensures fairness across test forms; it’s not a curve to outsmart.

- Guess smartly — no guessing penalty.

- Subscores and cross-test scores are your actionable roadmap for improving where it counts.

- Small score differences near cutoffs might not be meaningful because of measurement error.

Closing Thought: Learn the Rules, Then Play the Game Well

Understanding scoring turns anxiety into strategy. Once you know the mechanics — scaled vs. raw, equating, percentiles, subscores — the SAT becomes a practice problem you can methodically solve rather than a mysterious gatekeeper. If you want to move faster, targeted help like Sparkl’s personalized tutoring (1-on-1 guidance, tailored plans, and AI-driven insights) often helps students focus on the right weaknesses and convert effort into score gains efficiently.

Good luck — and remember: a well-planned month of focused, intelligent work will often beat a frantic last-minute cram. Score reports are tools; use them to plan, practice, and improve.
