Why this matters: The ripple effect of a single misleading SAT stat

It starts small: a parent posts “Average SAT scores dropped 40 points in our state!” in the PTA group. A counselor forwards an email with a pie chart that looks official. A guidance counselor hands out a one-page summary of last year’s averages that seems to favor a particular class of students. Within hours, students are stressed, families are calling tutors, and application strategies shift—sometimes in ways that don’t make sense.

Spotting fake or misleading SAT statistics is more than trivia. Those numbers can influence study plans, fee waiver decisions, scholarship expectations, and even which colleges a student believes are within reach. In this piece I’ll walk you through simple, practical ways to evaluate SAT claims, how to read College Board data correctly, and what to say (and do) when someone shares a too-good—or too-alarming—statistic.

Truth #1: Not every chart equals evidence

People love charts. They make complex information feel tidy and convincing. But pretty visuals can hide sloppy or deceptive methodology. A bar chart that compares “average scores” from different years may look persuasive, but unless you know who is included (all test takers? only juniors in school-day administrations? a subgroup?), the chart can be meaningless.

Quick sniff-test questions

- Who gathered the data? (Is it College Board, your district, or an anonymous spreadsheet?)

- Who is included? (All students? Only those who opted in? Only those who used test accommodations?)

- What metric is being shown? (Mean, median, or percentiles—these can tell very different stories.)

- Are the axes labeled clearly and honestly? (If the vertical axis doesn’t start at zero, differences can be exaggerated.)
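
To see why the choice of metric matters, here is a minimal Python sketch using made-up scores (the numbers are purely illustrative, not real data). Two very high scores pull the mean well above the median, so the same group can honestly be described with two quite different "averages."

```python
from statistics import mean, median

# Hypothetical scores for nine students, invented for illustration only.
# Two very high scores sit far above the rest of the group.
scores = [900, 950, 980, 1000, 1020, 1050, 1080, 1500, 1560]

avg = mean(scores)    # pulled upward by the two high scores
mid = median(scores)  # the middle student's score

print(f"mean = {avg:.0f}, median = {mid:.0f}")
# prints: mean = 1116, median = 1020
```

If a chart only says "average," it is fair to ask which of these it means.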

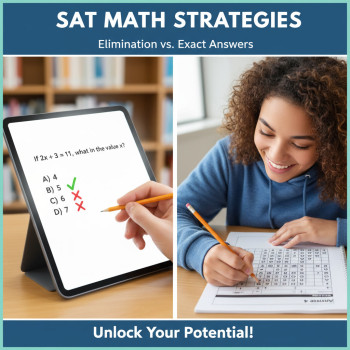

Truth #2: Know the most useful SAT measures—and the tricks people use

College Board reports several measures: total scores (400–1600), section scores (200–800 for Math and Evidence-Based Reading and Writing), and percentiles (which compare a score to other test takers). Here’s how these measures can be misused.

Common manipulations and what they mean

- Selective averages: Someone might report the “average score for students who took a prep class” and present it as the school average. That is an apples-to-apples comparison only if everyone had the same opportunity to take that class.

- Cherry-picked cohorts: Someone might quote the average for “students who applied early” without clarifying that early applicants can differ in important ways (e.g., more motivated, from wealthier districts).

- Percentiles used out of context: “You’re in the 40th percentile” sounds bad until you ask what the comparison group is: all U.S. test takers, or a particular year with different participation rates.
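
A small Python sketch shows how a selective average can mislead. The ten students and their scores below are hypothetical, invented only to illustrate the gap between a subgroup average and the whole-school average.

```python
from statistics import mean

# Hypothetical school of ten students: (took_prep_class, score).
# All values are invented for illustration.
students = [
    (True, 1350), (True, 1400), (True, 1450),
    (False, 950), (False, 1000), (False, 1050), (False, 1100),
    (False, 1150), (False, 1000), (False, 1100),
]

prep_scores = [score for took_prep, score in students if took_prep]
all_scores = [score for _, score in students]

prep_avg = mean(prep_scores)   # average for the prep-class subgroup only
school_avg = mean(all_scores)  # average for every tested student

print(f"prep-class average: {prep_avg:.0f}")
print(f"whole-school average: {school_avg:.0f}")
```

Here the subgroup average is 1400 while the whole-school average is 1155. Quoting the first number as "the school average" overstates it by 245 points, and it says nothing about whether the class caused the higher scores.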

How to verify an SAT statistic in five practical steps

When you see a headline claim or a shared graphic, run through this checklist before changing plans, paying money, or worrying your student needlessly.

1) Ask for the source—specifically

General answers like “the district” are a start, but a trustworthy claim should point to a named report or a College Board publication. If the source is missing, treat the statistic as suspect.

2) Check what population is being measured

Is the stat about a state, a school, only juniors who took SAT School Day, or all weekend test takers nationwide? Those populations aren’t interchangeable. For example, school-day testing often includes a broader, more representative group of students than weekend administrations. Weekend test takers are often self-selected, which can push weekend averages higher.
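
Here is a rough Python sketch of how a change in who is tested can move an average even when no individual student does worse. The scores are hypothetical and deliberately simple.

```python
from statistics import mean

# Hypothetical scores, invented for illustration only.
# Last year: five self-selected students tested on a weekend.
weekend_only = [1150, 1200, 1250, 1300, 1350]

# This year: a school-day administration adds five students who would not
# have signed up on their own. The first group's scores are unchanged.
added_by_school_day = [900, 950, 1000, 1050, 1100]
school_day = weekend_only + added_by_school_day

last_year_avg = mean(weekend_only)
this_year_avg = mean(school_day)
drop = last_year_avg - this_year_avg

print(f"reported average fell by {drop:.0f} points")
```

In this toy example the reported average falls from 1250 to 1125, a 125-point "drop" that comes entirely from broader participation, not from lower achievement.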

3) Look for the metric definition

Is that “average” the mean or the median? Does the chart show a shift in percentiles or in raw score points? A 10-point change in mean may be statistically insignificant; a shift in a percentile (e.g., 60th to 50th) might matter more for college selection.

4) Check timing and sample size

A claim about a single testing date can’t necessarily be extrapolated to a trend. Small sample sizes (like a graduating class of 40) make averages noisy—one high or low outlier moves the mean a lot.
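
To put a number on "noisy," the sketch below measures how far a single unusual score moves the mean in a class of 40 versus a group of 4,000. The function name and the scores (1000 as a typical score, 1600 as the outlier) are assumptions chosen for illustration.

```python
from statistics import mean

def mean_shift_from_one_outlier(class_size, typical=1000, outlier=1600):
    """How far one unusual score moves the mean of an otherwise identical group."""
    baseline = [typical] * class_size
    with_outlier = [typical] * (class_size - 1) + [outlier]
    return mean(with_outlier) - mean(baseline)

small_class = mean_shift_from_one_outlier(40)    # a graduating class of 40
large_group = mean_shift_from_one_outlier(4000)  # a district-sized group

print(f"class of 40: mean moves {small_class:.2f} points")
print(f"group of 4000: mean moves {large_group:.2f} points")
```

One student moves the small class's mean by 15 points but the large group's mean by only 0.15 points, which is why a single class's average should not be read as a trend.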

5) Compare to primary sources

The College Board publishes authoritative score reporting and guidance. If a statistic sounds radical—big drops, sudden spikes, unusual subgroup claims—compare it to official score releases and school-day or state reports. If the numbers don’t line up, ask more questions before acting.

Example: How a misleading headline can change a family’s plan

Imagine a community newsletter says: “Our district SAT scores dropped 35 points—apply to more safety schools.” That headline compresses a lot: which average? Which group? Over what period? If parents accept it at face value, juniors may shift to an overly conservative list, miss scholarship deadlines, or spend money on last-minute test prep that won’t change their admission chances as much as a strong essay would.

A clearer approach

- Request the full data: Was that 35-point drop the mean for all students or just the top quartile?

- Check whether test participation changed: If more students took the exam this year, averages often move.

- Look at percentiles: Did the percentile distribution change, or is it just a small shift in mean?

Table: Simple ways to interpret common SAT claims

| Claim | Possible truth | Quick check |
|---|---|---|
| “Average SAT score in our state fell 30 points” | Could reflect higher participation or reporting differences rather than lower achievement. | Ask for population, year-to-year participation rates, and whether it’s mean or median. |
| “Students who took Course X score 1400 on average” | May be true for that cohort but not causal: students who choose that course may already be stronger. | Request baseline scores and how students were selected for Course X. |
| “Our school is in the top 10% nationally” | Depends on whether the comparison uses the same test administration and excludes non-tested students. | Confirm the benchmark (national percentiles) and which administration (school-day vs weekend). |

Interpreting College Board data the smart way

The College Board is the primary source for SAT reporting. Their score reports include total scores, section scores, percentiles, and explanations. Here are practical tips for parents and students when you review College Board materials or when someone quotes from them.

What percentiles tell you (and what they don’t)

Percentiles show how a student performed relative to other test takers. A 70th percentile means the student scored the same as or better than 70% of the comparison group. But beware: percentiles are sensitive to who’s included in that comparison group and which year’s population is used. Always check which group the percentile references.
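
The sketch below applies the simple definition above (the share of the comparison group scoring at or below a given score) to two hypothetical groups. It is only an illustration: the function and both groups are invented, and the College Board's published percentiles come from its own reference groups and method, which this does not reproduce.

```python
def percentile_rank(score, group):
    """Percent of the group scoring at or below `score`."""
    at_or_below = sum(1 for s in group if s <= score)
    return 100 * at_or_below / len(group)

# Two hypothetical comparison groups, invented for illustration.
broad_group = [800, 900, 950, 1000, 1050, 1100, 1150, 1200, 1300, 1400]
selective_group = [1000, 1100, 1150, 1200, 1250, 1300, 1350, 1400, 1450, 1500]

student_score = 1100
rank_broad = percentile_rank(student_score, broad_group)
rank_selective = percentile_rank(student_score, selective_group)

print(f"vs. broad group: {rank_broad:.0f}th percentile")
print(f"vs. selective group: {rank_selective:.0f}th percentile")
```

The same 1100 lands at the 60th percentile in one group and the 20th in the other, so a percentile quoted without its comparison group tells you very little.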

Why section-level detail matters

A 30-point fluctuation in total score could be concentrated entirely in Math or Reading & Writing. That distinction matters for course choice, scholarship eligibility tied to certain sections, and targeted study plans.
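
As a quick illustration, the sketch below breaks a 30-point change in total score into its section components. The section averages are hypothetical.

```python
# Hypothetical school averages by section for two years (illustrative only).
last_year = {"Math": 520, "Reading and Writing": 530}
this_year = {"Math": 490, "Reading and Writing": 530}

# Change per section, then the change in the total.
section_change = {name: this_year[name] - last_year[name] for name in last_year}
total_change = sum(section_change.values())

for name, change in section_change.items():
    print(f"{name}: {change:+d}")
print(f"Total: {total_change:+d}")
```

Here the whole 30-point dip sits in Math, which points to a very different response than a dip spread evenly across both sections.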

Red flags that usually mean a statistic is misleading

- No identified source or a vague source like “an anonymous spreadsheet.”

- Very small sample sizes presented as broad trends.

- Mixing different testing populations (e.g., comparing district school-day results to national weekend results).

- Use of averages without reporting spread (standard deviation) or percentiles.

- Sensational language (“plummeted” or “skyrocketed”) without context or clear numbers.

Practical scripts: What to say when someone shares a suspicious statistic

It’s okay (and smart) to ask for clarification. Here are short, respectful responses you can use in a PTA chat, an email, or in person.

- “Thanks for sharing—do you know which report this comes from? I’d love to read the full context.”

- “Is that the mean or the median, and which students are included? Weekend vs. school-day testing makes a difference.”

- “That seems surprising—do you have the sample size or year-to-year participation rates?”

When numbers are real: How to translate them into action

Not all accurate statistics require panic. Here’s how to respond constructively when you verify a valid trend or figure.

If scores dipped slightly

- Look for patterns: did one section drop more than the other? Is the dip concentrated in one school or grade?

- Address the cause: Is it more test-day anxiety? Fewer practice opportunities? Then prioritize targeted practice and test-day strategies, not a wholesale change in the college list.

If a subgroup shows large variation

- Evaluate equity: Are certain students missing access to prep, quiet testing spaces, or accommodations?

- Find targeted supports: tailored tutoring, school resources, or refined study plans can be far more effective than panic-driven last-minute prep.

Real-world examples of responsible reporting

Responsible school or district reports include: the population tested, dates, whether the data are from school-day or weekend administrations, sample size, and a note about how averages were computed. If you receive a one-page summary that omits these items, ask for the full report or a follow-up conversation with a counselor.

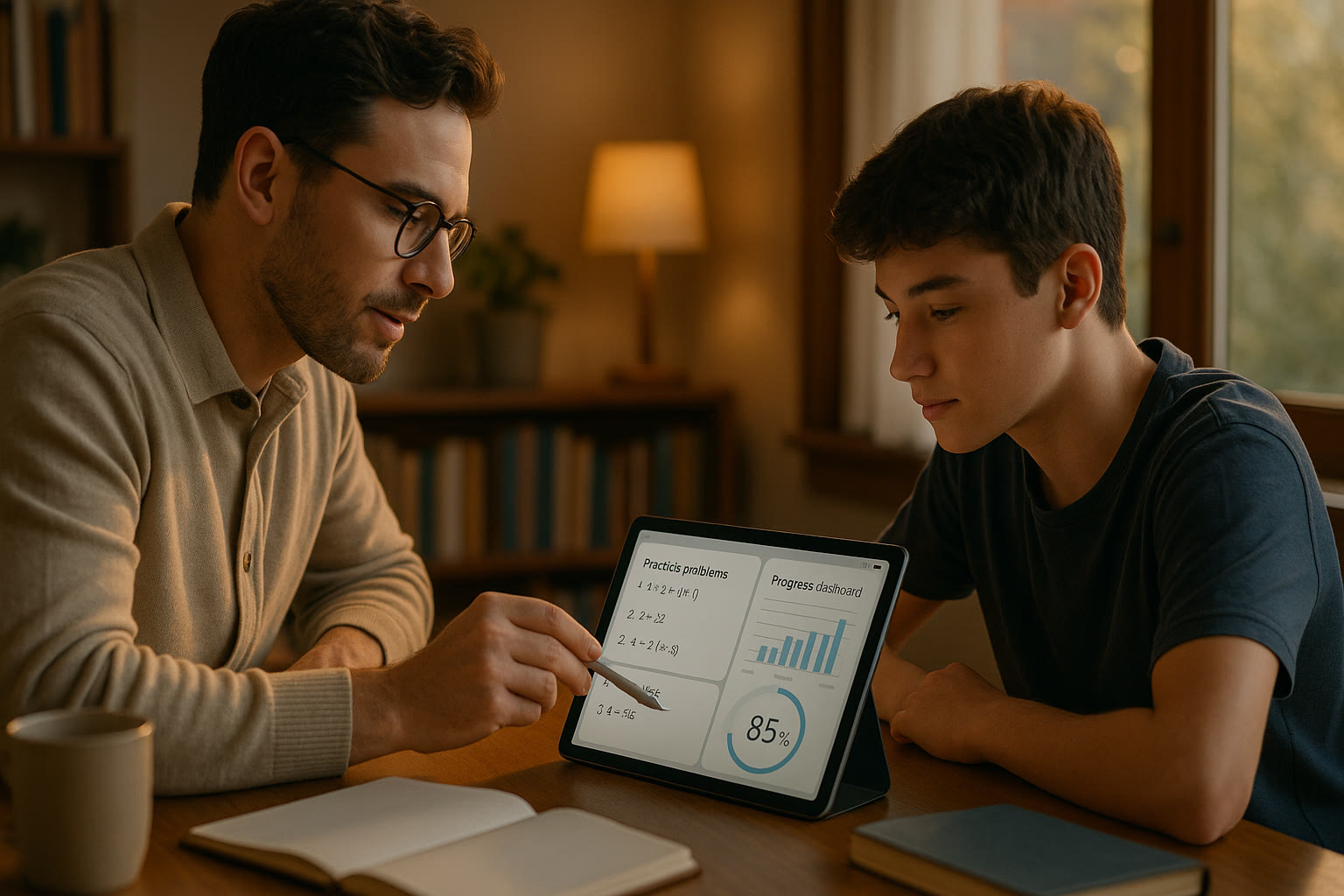

How tutoring and test prep fit into an evidence-based response

When a verified statistic indicates a real need—say, a particular school’s math scores are trailing state benchmarks—targeted support helps. This is where personalized tutoring shines. One-on-one guidance, tailored study plans, and expert tutors can address specific skills instead of chasing generalized score boosts. Programs that combine human coaching with data-driven insight—like adaptive practice and diagnostic assessments—help families invest time and money wisely.

For example, Sparkl’s personalized tutoring approach offers 1-on-1 guidance and tailored study plans that focus on a student’s most impactful gaps. When families use diagnostic results to build a focused plan, they often see better time-to-improvement than with broad, unfocused prep.

Practical tools you can use right now

Here are quick, no-nonsense resources and tactics for parents and students who want to evaluate SAT claims:

- Ask for the original report or data file; don’t accept a screenshot alone.

- Request clarification on whether the statistic is from school-day testing, weekend testing, or a different assessment entirely.

- Compare with official score reports (from your student’s College Board account) rather than relying on headlines.

- Use simple sanity checks: does the magnitude of change match the sample size and context?
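
One way to run that last sanity check is a back-of-the-envelope comparison between the claimed change and the amount of year-to-year wobble you would expect from chance alone. The sketch below uses the standard error of the difference between two group means. It is a rough estimate under stated assumptions: the spread of 200 points is an assumed value (the real spread for your school or district may differ), the 35-point drop is the example from earlier in this article, and the two years are treated as independent groups of equal size.

```python
import math

def noise_scale(sd, n_before, n_after):
    """Rough standard error of the difference between two independent group means."""
    return math.sqrt(sd**2 / n_before + sd**2 / n_after)

ASSUMED_SD = 200   # assumed spread of scores; not taken from any official report
claimed_drop = 35  # the newsletter example used earlier

small = noise_scale(ASSUMED_SD, 40, 40)      # two classes of 40 students
large = noise_scale(ASSUMED_SD, 4000, 4000)  # two cohorts of 4,000 students

print(f"n=40: expected wobble about {small:.1f} points")
print(f"n=4000: expected wobble about {large:.1f} points")
print(f"drop vs. wobble: {claimed_drop / small:.1f}x and {claimed_drop / large:.1f}x")
```

Under these assumptions, a 35-point drop is smaller than the expected wobble for a class of 40 (about 45 points) but nearly eight times the wobble for a cohort of 4,000 (about 4.5 points). The same headline number can be noise in one setting and a real signal in another.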

Checklist for parents before altering a student’s application plan

Before changing colleges, dropping essays, or investing in expensive last-minute prep because of a statistic you saw online or in a newsletter, run through this checklist with your student and their counselor.

- Confirm the statistic’s source and population.

- Ask whether the statistic is recent and whether it reflects an actual trend or a single year’s noise.

- Talk to the counselor about holistic admissions factors—scores are important, but colleges consider many elements.

- Use diagnostic testing to decide whether tutoring would likely shift outcomes meaningfully.

- If you pursue tutoring, choose a program that’s diagnostic-first and outlines clear milestones.

How to teach your student healthy data skepticism

Helping teenagers develop a skeptical but curious mindset is a gift. Teach them to always ask: who made this claim, who benefits if it’s believed, and what would I want to see to be convinced? Make it a small family habit to ask follow-up questions before forwarding alarming stats to others—your household can become the community’s fact-checkers.

Short parent scripts to de-escalate panic

- “Let’s pull the original data before we change our plans.”

- “We’ll check with the counselor and the College Board report—then decide.”

- “If we need tutoring, let’s start with a diagnostic and a short trial focused on weaknesses.”

Summary: Numbers are powerful—handle them with care

Fake or misleading SAT statistics spread quickly because they’re emotionally compelling. But with a few questions and a little patience, you (and your student) can separate sensational claims from meaningful signals. Trust authoritative sources for raw data, be skeptical of unlabeled charts, and use diagnostics and targeted support—such as 1-on-1 tutoring and tailored study plans—when the data points to real needs.

Final practical resources and next steps

When you see a statistic that could change your student’s path, pause and use the checklist in this article. Reach out to your counselor for the raw data behind school or district summaries. If you decide extra help is warranted after a diagnostic review, look for tutoring that is personalized and measures progress—not one-size-fits-all promises. Personalized tutoring that includes expert tutors, AI-driven insights, and measurable milestones can make targeted practice more effective and less stressful for families.

A final note to parents

You are an essential advocate for your student—asking clarifying questions, protecting them from panic, and guiding smart, evidence-based decisions. The right response to a statistic is rarely immediate alarm; it’s a thoughtful check, a conversation with a counselor, and, if needed, focused action. With curiosity and the right tools, misleading numbers won’t derail your student’s college journey—they’ll be just another piece of information to weigh alongside the essay, transcript, and recommendation letters.

Take a breath, run through the five verification steps, and remember: good data and good guidance win, every time.
